There are numerous compression tools available to Linux and Database administrators including gzip, xz, bzip2, and pigz and others. Gzip has been the classic go to for compression, however there has been a move to xz over gzip due to improvements in compression. This quick guide will show the differences in compression and time to complete specifically when dealing with mysqldump. When dumping databases, it is often a balance between total achieved compression and time it take to complete, to keep lock time to a minimal.

In this test I compared the time to complete MySQL dump with differing compress levels. The idea is to find that balance between compressing the data and getting the job done quick. If you have 50 GB of database data and only 10 GB of remaining space, you may want to use as much as compression as possible. However if you have 100 GB of data, and 1TB of free space, your priority may be to minimize locking time during the dump, so you may want less compression in favor of speed.

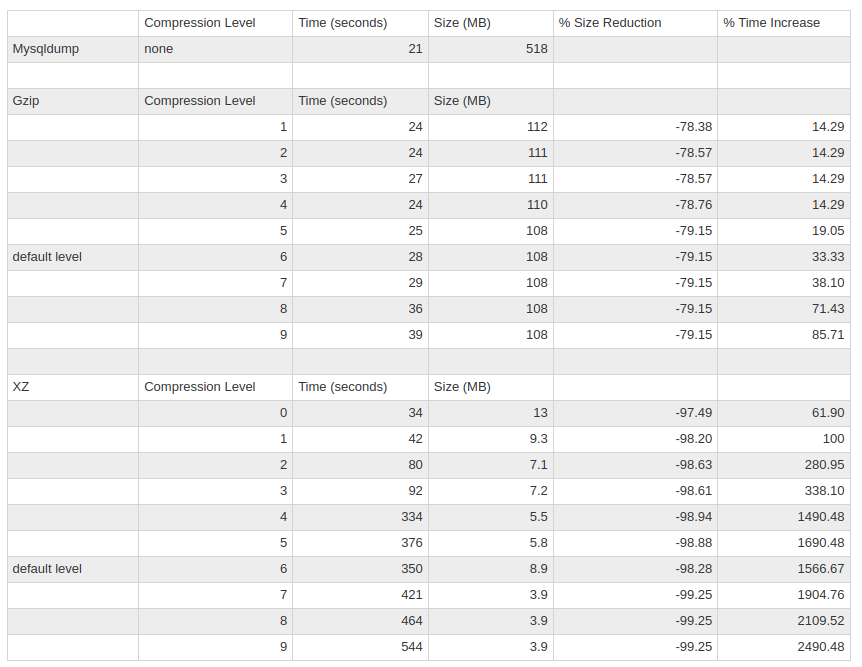

Uncompressed, the mysqldump was 518 MB and took 21 seconds to complete. So that is our baseline for our tests. The following is the command to issue our mysqldump without any compression.

time mysqldump --routines --all-databases > /root/all_databases.sql

I then issued the same dump and redirected the output to different compression levels with both gzip and xz. We are redirecting the mysqldump output directly to compression to maximize performance and reduce disk space usage. The following are examples of the default compression level for both gzip and xz.

time mysqldump --routines --all-databases | gzip -6 > /root/all_databases.sql.gz

time mysqldump --routines --all-databases | xz -6 > /root/all_databases.sql.xz

After completing the mysqldump numerous times with different compression levels, I created a chart with the results of each dump including the time it took to complete, the size of the compressed dump, and the difference in size and time to complete compared to our control with no compression.

Looking at the chart briefly, we can see the obvious pros and cons of xz. For starters, the compression level is superior to gzip. Even at the lowest level of compression with xz, the final sql dump is smaller than the highest compression level gzip. However, this comes at a pretty big cost: the time it takes to compress.

If your dumping a production database of MyISAM tables, your tables are locked during the dump. If your redirecting that dump to xz at the default compression level of 6, its possible you can see a 1000% or more increase in the time it takes to complete the dump, which is not okay in production.

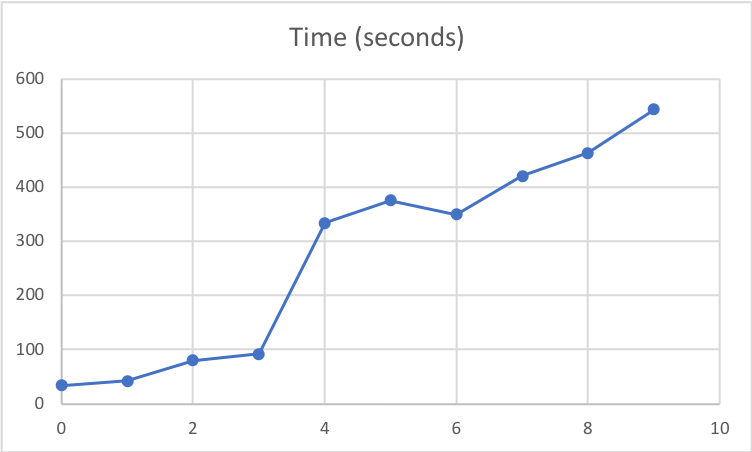

We can see that xz does offer superior compression at lower compression levels without dramatically increasing the time to completion. The following chart shows the increase in time to completion with each compression level

Conclusion

- When your in a hurry and have plenty of disk space available: gzip is your friend. Its quick and even at the lowest compression level you can see a 75% reduction in size of the dump

- When disk space is of concern: xz offers superior compression. At the lowest level of compression the final size of the dump can be more than 90% smaller while only increasing the dump time by around 60%.

Other optimizations

One area this test did not cover was further optimizations in the tools themselves to allocate more resources to the compression. Splitting the compression between multiple threads can have a great impact in the time it takes to complete. You can also allocate more memory to the compression buffers as well.

I wanted to provide a baseline comparison so you can choose the best option for the server your working on. If your dumping a large dataset on a small 2 CPU virtual machine, you may not have free resources to allocate to the compression itself. Whereas if you are on a 24 CPU dedicated server, you may be able to increase the amount of memory and CPU the compression utility is allowed to use and thus drastically reduce the time it takes to complete.

I recommend testing your options once you pick a compression level that suits your needs best. If you are creating your own backup script for your database server, run it a few times with different options to get the best backup strategy that works for your workload, as they are all different.

Considerations

One last note to keep in mind, compression size and time can be very dependent at what you are throwing at it. Your results could be very different depending on the data you are compressing. The table and results provided are not a baseline for all compression, it is looking specifically at dumping sql text and your results could be very different depending on what is in your database.